The Gulf of Execution

Bridge or Chauffeur?

One of my favourite textbooks is from my Human-Computer Interaction (HCI) class at university: "The Design of Everyday Things" by Don Norman. It was truly a fascinating and enlightening read, all the better for being required reading ☺

I still have my copy, even more than two decades later.

The book is a foundational work in user interface design and probably needs no introduction for anyone working in software, especially those working on UI and UX design. It covers how humans hold goals and how we can design the interface of a system to better facilitate the achievement of those goals. The book is replete with examples of how bad design can confound the human mind and actually make achieving a goal harder. One of my favourite examples is the mismatched use of "push plates" and "pull handles" on doors.

The two key concepts introduced in the book are the 'Gulf of Execution' and the 'Gulf of Evaluation', loosely representing, respectively, the cognitive distance to achieving what you want and to understanding that you are on the way to achieving it. In this article I want to talk about the gulf of execution, which the book describes as:

"The difference between the [user's] intentions and the [system's] allowable actions... One measure of this gulf is how well the system allows the person to do the intended actions directly, without extra effort..."

How Far We Have Come

Since the book was originally published in 1988, a great deal of further research, development and experimentation has been done in human-computer interaction. The user experience on offer today is, in general, far superior. In fact, with the processing power and sheer variety of digital devices available to us these days, we can easily say that the two eras are worlds apart. And given that our devices are now all interconnected, and applications tend to be backed by some cloud-based service, any one interface really only embodies the device-specific component of a far wider user experience and overall journey.

But the gulf of execution doesn't specifically talk about technology, or experience, or journey. It talks about "how directly can I get done what I want to get done?" There are still gadgets that require some mental gymnastics to operate. The cheap camera I got for my son when he was a toddler is a good example. Apart from the fact that it has games installed on it for some reason, its other functions, while quite in line with what one would expect of a camera, are assigned to its buttons in an entirely confusing way.

In the case of the camera, since the screen has no touch function, I am restricted to the few tactile buttons it has, and to however the designers chose to assign their functions. It's clear this was not a deeply considered exercise. Conversely, on a modern smartphone (say an iPhone, since Don Norman was a Fellow at Apple and the first person with a job title including "user experience"), tactile buttons are still minimal but are assigned to functions which apply in almost any situation: "be quiet!"/"I can't hear you!" and "go away"... I believe the technical names are some version of "volume" and "sleep". Of course, those buttons are sometimes overloaded with extra functions in official apps, such as the "double click to install" prompt. The point is that the thought that goes into that design goes a long way.

Getting To the Heart of the Matter

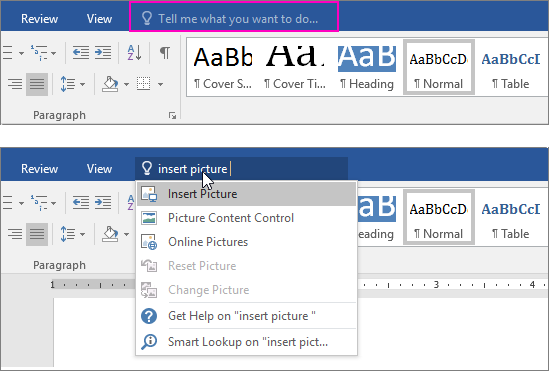

Of course, we can't have a button for everything. The closest thing we have had for a while is the ability to search directly for a function. Sticking with Apple for a moment, on Macs and iPhones the most common apps and settings we need can be reached via Spotlight. On Windows, a similar search is built into the Start menu, and even Office has the "Tell me..." search box, for every time you know exactly which of the 500 features you need right now!

However, this still leaves a cognitive gulf, in that we need to remember the name of the function we want. Otherwise, it's an extra step (several, actually) to Google it first!

The New Kid in Town

I use ChatGPT almost daily. From obscure coding syntax to the etymology of words, for many goals in information retrieval it is reducing the gulf of execution to nearly zero. I just say what I want to know, and I usually get it. Or, I spend the next 20 minutes trying to explain it, but that's the minority of cases. Honest.

Now, you might say, "Ah, but there is still the cognitive effort of trying to get the model to understand you." I would say yes... but mostly no. There are some cases where the model just doesn't get it, but in my experience with the latest iterations these are becoming vanishingly rare. All the same, what I have found pays off most is making sure I express my goals clearly. Consider that when talking with people we know, or within a close community, we often take shortcuts in our use of language. But travel across the country, talk to a new grad or work with overseas visitors, and you will find yourself paying a lot more attention to what you are saying. So I would argue that no, this is not a cognitive load in achieving the goal; it is the necessary cognitive effort of being unambiguous about your goal. That's on you.

So what's the point? Well, there are two points I wish to convey.

Using ChatGPT has given us a glimpse of the level at which we will be able to interact with systems. With large language model (LLM) technology, on which ChatGPT, Claude, Gemini and others are based, systems can actually interpret (I very purposefully do not say understand) our goals directly from natural language. Insofar as the system affords achievement of the goal, agents can communicate amongst themselves to achieve it. In fact, it is exactly this ability of a model to interpret the user's natural language, and then co-operate with other models (as agents) in that same natural language, that has narrowed the gulf so far. Note that instructions for LLM agents are also written in natural language. This means that, in principle, the cognitive workload is offloaded directly to the system, which has been instructed by the system designer in exactly the same natural language as the user's.

"Get me to my goal, and step on it!"

I smile sometimes when I think how magnificently impatient LLMs have made me with systems that have traditional UIs. My favourite is when I know there is that one setting I need to change, and the hunt is on... now with amplified frustration! 😂

LangChain and similar libraries are wonderful when the interface of the system is primarily language-based, like an automated advisor or booking agent. Don't get me wrong: the level of automation achieved with these technologies, together with function calling, retrieval-augmented generation (RAG) and the like, is mind-blowing. But these don't help me with my UI-based app. What about those?

Microsoft famously announced Microsoft 365 Copilot, the integration of LLM technology with its 365 suite of applications, aiming to implement exactly what we've been discussing here.

Apple has also recently announced research on the quite aptly named Ferret-UI, a multimodal LLM. This model is designed to see and interpret the UI on a screen, attempting to match the user's goal with the UI's design. For systems which were not designed with LLMs in mind, this is clearly a desirable capability.

But both of these are massive corporations with the cash to literally build their own models. What about the rest of us? We have access to GPTs, but how can we integrate them into our apps? One excellent project I have seen is CopilotKit, both a library and a cloud service, which aims to let you easily add copilot power to your web app. While most of the examples I see are backed by the larger online models like Claude or GPT-4, I note that Google is now testing Gemini Nano inside Chrome.

All told, it seems that the gulf of execution, induced by the necessity of interfacing a computer system with a human, is becoming ever narrower. It's quite profound that we are now able to interface with, and even design, systems in natural language itself. There's no need even for a user manual if it is encoded into the very system it is intended to describe. I could go on about the merging of design and execution, but I will leave it here and say that I am overwhelmingly excited about the possibilities in the near term. I expect the effort being put into copilots will only increase and spread.
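To make the function-calling idea a little more concrete for a UI-based app, here is a minimal Python sketch of how an app might expose a couple of its settings to an LLM as "tools". It assumes an OpenAI-style function-calling format, where each tool carries a plain-language description plus a JSON Schema for its parameters, and the model replies with a tool name and JSON-encoded arguments. The settings, `set_dark_mode`, `set_font_size` and `dispatch` are all hypothetical, no model is actually called (the example tool call stands in for a model's reply), and this is not CopilotKit's API or any particular library's implementation.

```python
import json

# Hypothetical app state: settings a traditional UI might bury in menus.
settings = {"dark_mode": False, "font_size": 12}


def set_dark_mode(enabled: bool) -> str:
    """Hypothetical action: switch the colour theme."""
    settings["dark_mode"] = enabled
    return f"Dark mode {'on' if enabled else 'off'}"


def set_font_size(points: int) -> str:
    """Hypothetical action: change the text size."""
    settings["font_size"] = points
    return f"Font size set to {points}pt"


# Tool definitions in an OpenAI-style function-calling shape (an assumption).
# The plain-language "description" is what a model would read to match the user's goal.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "set_dark_mode",
            "description": "Turn the app's dark colour theme on or off.",
            "parameters": {
                "type": "object",
                "properties": {"enabled": {"type": "boolean"}},
                "required": ["enabled"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "set_font_size",
            "description": "Make the text bigger or smaller, in points.",
            "parameters": {
                "type": "object",
                "properties": {"points": {"type": "integer"}},
                "required": ["points"],
            },
        },
    },
]

# Map each advertised tool name to the code that actually performs it.
HANDLERS = {"set_dark_mode": set_dark_mode, "set_font_size": set_font_size}


def dispatch(tool_call: dict) -> str:
    """Run a tool call given as {"name": ..., "arguments": "<JSON string>"}."""
    if tool_call["name"] not in HANDLERS:
        raise ValueError(f"Unknown tool: {tool_call['name']}")
    args = json.loads(tool_call["arguments"])
    return HANDLERS[tool_call["name"]](**args)


# Stand-in for what a model might return for "it's too bright, make it darker".
example_call = {"name": "set_dark_mode", "arguments": '{"enabled": true}'}
print(dispatch(example_call))  # Dark mode on
```

The design choice worth noticing is that the descriptions are written in plain language: the same natural language the user speaks is what tells the model which of the app's allowable actions fits their goal, so the user never has to know the setting's name or where it lives in the menus.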

No more fishing through countless menus or trying to figure out where they hid the hamburger menu this time. Just talk to the copilot and have it drive you across that gulf!